Videos

See AI2's full collection of videos on our YouTube channel.

Viewing 231-240 of 257 videos

Consciousness in Biological and Artificial Brains

July 10, 2015 | Christof Koch

Human and non-human animals not only act in the world but are capable of conscious experience. That is, it feels like something to have a brain and be cold, angry or see red. I will discuss the scientific progress that has been achieved over the past decades in characterizing the behavioral and the neuronal…

Machine Learning with Humans In-the-Loop

April 21, 2015 | Karthik Raman

In this talk I discuss the challenges of learning from data that results from human behavior. I will present new machine learning models and algorithms that explicitly account for the human decision making process and factors underlying it such as human expertise, skills and needs. The talk will also explore how…

Bring Your Own Model: Model-Agnostic Improvements in NLP

April 7, 2015 | Dani Yogatama

The majority of NLP research focuses on improving NLP systems by designing better model classes (e.g., non-linear models, latent variable models). In this talk, I will describe a complementary approach based on incorporation of linguistic bias and optimization of text representations that is applicable to several…

Going Beyond Fact-Based Question Answering

April 7, 2015 | Erik T. Mueller

To solve the AI problem, we need to develop systems that go beyond answering fact-based questions. Watson has been hugely successful at answering fact-based questions, but to solve hard AI tasks like passing science tests and understanding narratives, we need to go beyond simple facts. In this talk, I discuss how…

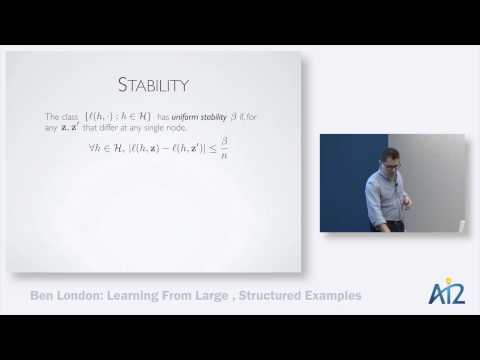

Learning from Large, Structured Examples

March 31, 2015

In many real-world applications of AI and machine learning, such as natural language processing, computer vision and knowledge base construction, data sources possess a natural internal structure, which can be exploited to improve predictive accuracy. Sometimes the structure can be very large, containing many…

Distantly Supervised Information Extraction Using Bootstrapped Patterns

March 27, 2015 | Sonal Gupta

Although most work in information extraction (IE) focuses on tasks that have abundant training data, in practice, many IE problems do not have any supervised training data. State-of-the-art supervised techniques like conditional random fields are impractical for such real world applications because: (1) they…

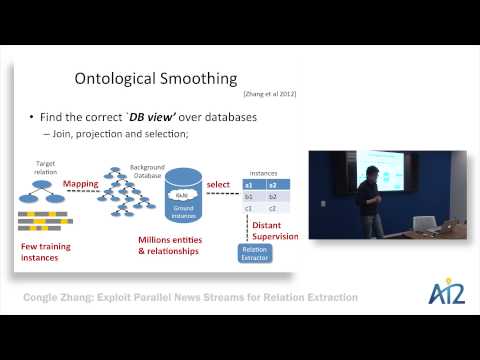

Exploiting Parallel News Streams for Relation Extraction

March 17, 2015 | Congle Zhang

Most approaches to relation extraction, the task of extracting ground facts from natural language text, are based on machine learning and thus starved by scarce training data. Manual annotation is too expensive to scale to a comprehensive set of relations. Distant supervision, which automatically creates training…

Language and Perceptual Categorization in Computer Vision

March 12, 2015 | Vicente Ordonez

Recently, there has been great progress in both computer vision and natural language processing in representing and recognizing semantic units like objects, attributes, named entities, or constituents. These advances provide opportunities to create systems able to interpret and describe the visual world using…

Learning and Sampling Scalable Graph Models

March 11, 2015 | Joel Pfeiffer

Networks provide an effective representation to model many real-world domains, with edges (e.g., friendships, citations, hyperlinks) representing relationships between items (e.g., individuals, papers, webpages). By understanding common network features, we can develop models of the distribution from which the…

Spectral Probabilistic Modeling and Applications to Natural Language Processing

March 3, 2015 | Ankur Parikh

Being able to effectively model latent structure in data is a key challenge in modern AI research, particularly in Natural Language Processing (NLP) where it is crucial to discover and leverage syntactic and semantic relationships that may not be explicitly annotated in the training set. Unfortunately, while…